Explore the world of Matching Transformers in AI: their workings, applications, challenges, and future developments in machine learning.

Introduction to Matching Transformers

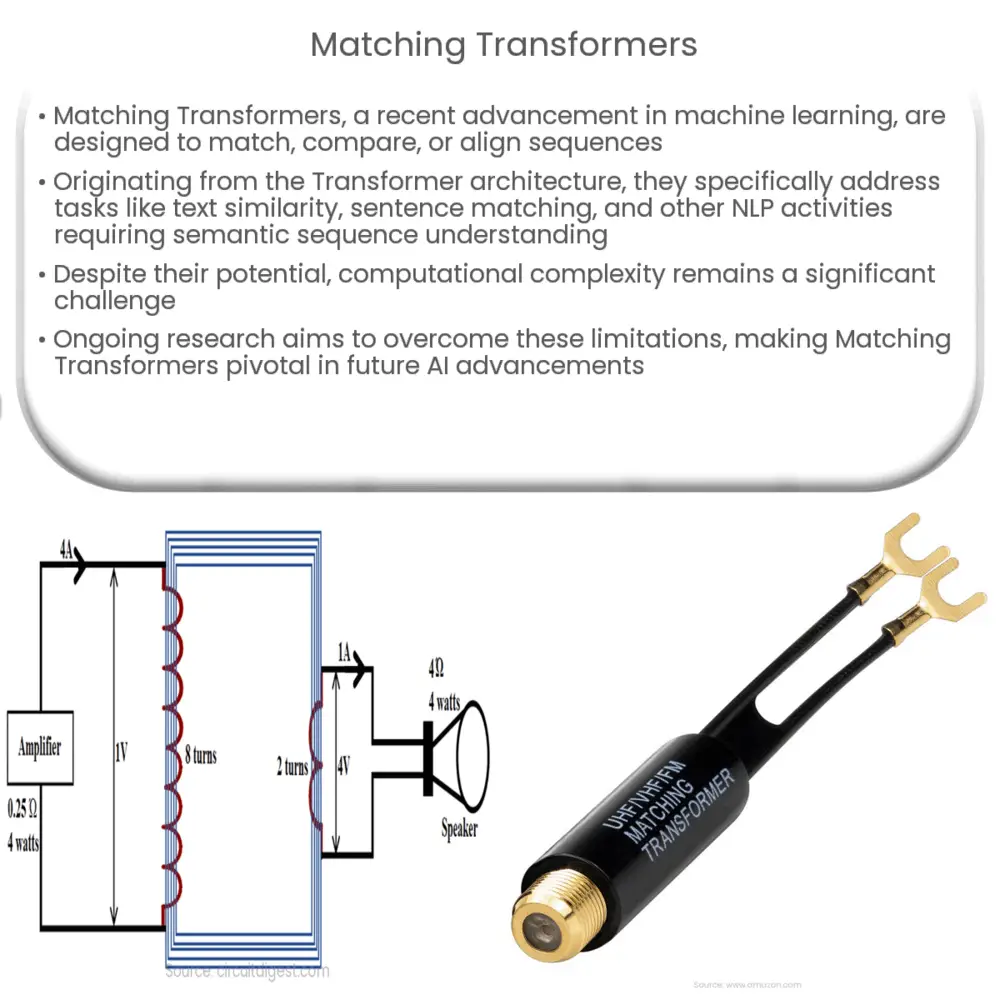

Matching Transformers are a recent and active area of research in machine learning and artificial intelligence. The idea is to apply transformer models, a neural network architecture for processing sequential data, to tasks where two sequences must be matched, compared, or aligned.

Understanding Transformers

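Before we delve into Matching Transformers, let’s briefly look at what transformers are. Transformers are a type of neural network architecture introduced in the paper “Attention Is All You Need” by Vaswani et al. in 2017. They revolutionized natural language processing (NLP) for two main reasons. Their attention mechanism relates every position in a sequence directly to every other position, which helps with long-range dependencies. And unlike recurrent networks, they process all positions of a sequence in parallel, which lets them scale well on modern hardware.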
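
For reference, the central operation in that paper is scaled dot-product attention. Here $Q$, $K$, and $V$ are matrices of query, key, and value vectors and $d_k$ is the key dimension. Each row of $QK^{\top}$ scores one query against every key, and the softmax turns those scores into weights over the values:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V
$$

When the queries come from one sequence and the keys and values from another, this is called cross-attention. It is one way to build the kind of sequence comparison described below.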

The Advent of Matching Transformers

Matching Transformers are a natural extension of the Transformer model, designed for a particular class of problems: those that involve comparing two sequences with each other. They are well suited to tasks such as text similarity assessment, sentence matching, and other NLP tasks that require judging how semantically similar two sequences are.
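
One common way to let a single transformer compare two texts, used by BERT-style cross-encoders, is to pack both texts into one input sequence with separator tokens and segment ids. Self-attention over the combined sequence then lets every token of one text attend to every token of the other, and a classifier on the `[CLS]` position produces the match score. The sketch below shows only the packing step. It assumes a toy lowercase whitespace tokenizer (real models use their own subword tokenizers), and `pack_pair` is a hypothetical name.

```python
def pack_pair(text_a: str, text_b: str) -> tuple[list[str], list[int]]:
    """Build a BERT-style pair input: [CLS] A [SEP] B [SEP].

    Returns the tokens and a parallel list of segment ids: 0 for [CLS],
    the first text, and the first [SEP]; 1 for the second text and final [SEP].
    """
    # Toy tokenizer (assumption): lowercase and split on whitespace.
    tokens_a = text_a.lower().split()
    tokens_b = text_b.lower().split()
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


tokens, segments = pack_pair("How do I reset my password", "Use the forgot password link")
for tok, seg in zip(tokens, segments):
    print(seg, tok)
```

The segment ids tell the model which text each token came from, since after concatenation the two texts would otherwise be indistinguishable apart from the separator.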

Working Principle of Matching Transformers

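Matching Transformers work by comparing each element of one sequence with every element of the other. The resulting pairwise scores are used to build a representation of how the two sequences relate, and this representation is the basis for the model's output, typically a similarity score or a match/no-match label. This differs from self-attention within a single sequence, which relates the elements of that sequence only to each other. One way to implement the comparison is cross-attention, the same mechanism the original encoder-decoder Transformer uses to let the decoder attend to the encoder's output; matching models can apply it to compare two inputs directly.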
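
A minimal sketch of that comparison step follows, written as cross-attention in plain Python. It assumes each sequence has already been turned into a list of embedding vectors, and it omits the learned query/key/value projections a real model would apply. The final comparison is a simple stand-in for a learned layer: it averages the cosine similarity between each element of A and the weighted summary of B it attends to. The function names and toy vectors are hypothetical.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def cosine(u: list[float], v: list[float]) -> float:
    norm = math.sqrt(dot(u, u)) * math.sqrt(dot(v, v))
    return dot(u, v) / norm if norm > 0 else 0.0


def cross_attend(seq_a: list[list[float]], seq_b: list[list[float]]) -> list[list[float]]:
    """For each vector in seq_a, return a softmax-weighted mix of seq_b's vectors.

    Every element of A is scored against every element of B with a scaled
    dot product, so the work is len(seq_a) * len(seq_b) comparisons.
    No learned projections are applied (a simplifying assumption).
    """
    d = len(seq_a[0])
    aligned = []
    for a in seq_a:
        weights = softmax([dot(a, b) / math.sqrt(d) for b in seq_b])
        aligned.append([sum(w * b[i] for w, b in zip(weights, seq_b)) for i in range(d)])
    return aligned


def match_score(seq_a: list[list[float]], seq_b: list[list[float]]) -> float:
    # Hypothetical comparison layer: mean cosine similarity between each
    # A-vector and the B-summary it attended to. Real models learn this step.
    aligned = cross_attend(seq_a, seq_b)
    return sum(cosine(a, s) for a, s in zip(seq_a, aligned)) / len(seq_a)


# Toy 2-dimensional "embeddings" (made up for illustration).
a = [[1.0, 0.0], [0.0, 1.0]]
same = [[0.0, 1.0], [1.0, 0.0]]      # same vectors as a, different order
other = [[-1.0, 0.0], [0.0, -1.0]]   # vectors pointing the opposite way
print(match_score(a, same) > match_score(a, other))  # True
```

Because the attention weights let each element of A find its counterpart in B regardless of position, the reordered sequence still scores high (about 0.90 here), while the opposite-direction sequence scores below zero.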

Applications of Matching Transformers

- Text Similarity: They can measure how similar two pieces of text are, which is useful for plagiarism detection, document matching, and similar tasks.

- Question Answering: Matching Transformers can be applied in question answering systems to match a question with potential answers in a given context.

- Chatbots: They can also be used to power conversational AI, matching user input to the most appropriate response in a database.
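
The question-answering and chatbot cases above share one retrieval step: score the user input against each stored candidate and return the best. Below is a minimal sketch, assuming a pair-scoring function is available. `rank_candidates` and `word_overlap` are hypothetical names, and the word-overlap scorer is only a placeholder for a trained Matching Transformer.

```python
from typing import Callable


def rank_candidates(query: str, candidates: list[str],
                    score_fn: Callable[[str, str], float]) -> list[tuple[float, str]]:
    """Score every (query, candidate) pair and return them best-first."""
    scored = [(score_fn(query, c), c) for c in candidates]
    # Sort by score only; Python's sort is stable, so ties keep database order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored


def word_overlap(a: str, b: str) -> float:
    # Placeholder scorer (Jaccard overlap of word sets). In a real system a
    # trained matching model would produce this score instead.
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


responses = [
    "our store opens at 9 am",
    "you can reset your password from the login page",
    "shipping takes three to five days",
]
ranking = rank_candidates("how do i reset my password", responses, word_overlap)
best_score, best_response = ranking[0]
print(best_response)  # you can reset your password from the login page
```

Because each candidate needs its own call to the scoring model, this exhaustive loop becomes expensive as the response database grows.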

While the potential of Matching Transformers is enormous, they are not without their challenges. In the next section, we will dive deeper into these challenges and discuss possible solutions.

Challenges and Possible Solutions

Despite their many applications and potential benefits, Matching Transformers come with their own set of challenges. One of the most significant hurdles is computational cost. Because the model compares every element of one sequence with every element of the other, sequences of lengths n and m require n × m comparisons, so the work grows quadratically when both sequences grow: doubling both lengths quadruples the number of comparisons and the memory needed to store their scores.
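
The quadratic growth is easy to check with a little arithmetic. The helper below (a hypothetical name) counts the pairwise scores for one comparison between sequences of lengths n and m and the memory needed to hold them. It assumes 4-byte (float32) scores; real models multiply this by the number of attention heads and layers.

```python
def score_matrix_cost(len_a: int, len_b: int, heads: int = 1,
                      bytes_per_score: int = 4) -> tuple[int, int]:
    """Return (number of pairwise scores, bytes to store them).

    Assumes one score per (element of A, element of B) pair per head,
    stored as float32 by default.
    """
    scores = len_a * len_b * heads
    return scores, scores * bytes_per_score


for n in (256, 512, 1024):
    scores, nbytes = score_matrix_cost(n, n)
    print(f"n = m = {n:4d}: {scores:>9,} scores, {nbytes / 2**20:.2f} MiB")
```

Each doubling of both lengths multiplies the score count by four: 65,536, 262,144, and 1,048,576 scores (0.25, 1.00, and 4.00 MiB) for a single head.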

One possible solution is to use more efficient matching techniques, for example ones inspired by computer vision, where coarse-to-fine schemes first match downsampled representations and then refine only the promising regions. In addition, pruning and sparse or approximate attention mechanisms can concentrate computation on the most relevant parts of the sequences.
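
One simple form of pruning keeps, for each element of one sequence, only its k highest-scoring partners in the other sequence and masks the rest before the softmax. The sketch below assumes top-k selection as the pruning rule, which is one choice among several; `top_k_mask` is a hypothetical name. It still computes every score before pruning, so it shows the selection logic only. Actual savings require methods that avoid scoring every pair in the first place.

```python
import heapq
import math


def top_k_mask(scores: list[float], k: int) -> list[float]:
    """Keep the k largest scores; replace the rest with -inf so softmax gives them weight 0."""
    if k < 1:
        raise ValueError("k must be at least 1")
    if k >= len(scores):
        return list(scores)
    # Indices of the k largest scores.
    keep = set(heapq.nlargest(k, range(len(scores)), key=scores.__getitem__))
    return [s if i in keep else float("-inf") for i, s in enumerate(scores)]


def softmax(xs: list[float]) -> list[float]:
    # The max is finite because at least one finite score survives masking;
    # math.exp(-inf) evaluates to 0.0, so masked entries get zero weight.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Scores of one element of A against five elements of B (made-up values).
row = [2.0, 0.1, 1.5, -0.3, 0.9]
weights = softmax(top_k_mask(row, k=2))
print([round(w, 3) for w in weights])  # [0.622, 0.0, 0.378, 0.0, 0.0]
```

All of the attention weight now goes to the two strongest matches, so later computation that uses these weights can skip the masked partners.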

Future Developments

The future of Matching Transformers looks promising. Researchers continue to explore ways to optimize the model and its matching mechanism for computational efficiency, accuracy, and versatility. As these models mature, they are expected to contribute to advances in domains such as healthcare, law, and customer service.

Comparison with Other Models

Compared with recurrent models such as LSTMs and GRUs, Matching Transformers tend to handle long-sequence matching better. The main reason is that attention connects any element of one sequence directly to any element of the other, instead of passing information step by step through a recurrent state. However, they can be more computationally intensive and are not always the best choice for a given task and resource budget.

Conclusion

To sum up, Matching Transformers have significantly advanced sequence matching and comparison in natural language processing. Their ability to compare every element of one sequence with every element of another opens up new applications. They do present challenges, particularly their computational demand, but ongoing research aims to reduce these costs. The future of Matching Transformers therefore holds considerable potential, and they are likely to play an important role in the next wave of innovation in artificial intelligence and machine learning.